Measurement Invariance

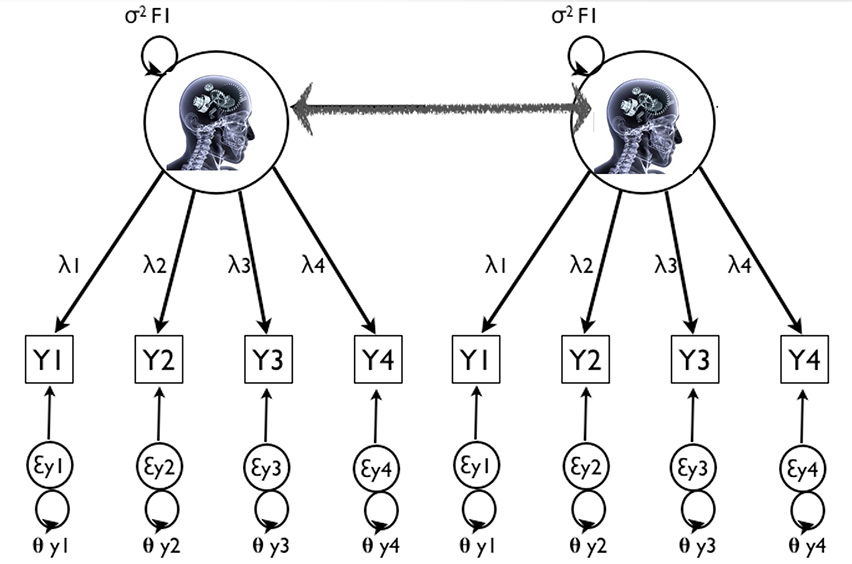

Surveys can be used to assess abstract concepts in people, such as prolonged grief disorder, peritraumatic dissociation and students’ motivation. When such concepts are operationalized by means of questions or “items”, they are called “latent variables”. Comparing latent variables across groups or over time rests on the assumption of measurement invariance.

Multi-item surveys typically have a group component and/or a time component. A study could compare groups of individuals, for example across countries in Europe (Hox et al., 2012). With regard to time, a person’s score on a factor can be evaluated repeatedly, for example the level of traumatic stress of soldiers before, during and after deployment to Afghanistan (e.g. Lommen et al., 2014).

The average scores per person, the latent factor means, and the relations between latent variables can only be meaningfully compared when the measurement structure, i.e. the relation between the items and the construct, is stable across groups and/or over time. When this assumption is met, one can speak of “measurement invariance” (Van de Schoot et al., 2012). For empirical examples in which invariance testing has been applied, see for example: The Stability of Problem Behavior Across the Preschool Years (Basten et al., 2016); a cross-cultural comparison of Meaning-in-life orientations and values in youth (Gorlova et al., 2012); a cross-disorder symptom analysis in Gilles de la Tourette syndrome patients and family-members (Huisman-Van Dijk et al., 2016); a comparison of the Illness Invalidation Inventory across language, rheumatic disease, and gender (Kool et al., 2014); a multigroup comparison of the Ethnic Identity Measure (MEIM) across Bulgarian, Dutch and Greek samples (Mastrotheodoros et al., 2012); a comparison of the Satisfaction With Life Scale across immigrant groups (Ponizovsky et al., 2013); a comparison of the Emotion Regulation Questionnaire across countries (Sala et al., 2012); a comparison of the Friends and Family Interview across Belgium and Romania (Stievenart et al., 2012); a comparison of the Burn Specific Health Scale-Brief across European countries (Van Loey et al., 2013); a comparison of the Youth Self-Report internalizing syndrome scales among immigrant adolescents and over time (Verhulp et al., 2014).
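To see why comparisons depend on a stable measurement structure, the sketch below works through a small hypothetical case. It assumes a linear one-factor model in which the expected score on an item equals its intercept plus its loading times the latent mean. All numbers, variable names and the helper `expected_item_means` are made up for illustration; none of them come from the studies cited above.

```python
from statistics import fmean


def expected_item_means(intercepts, loadings, latent_mean):
    """Model-implied item means under a linear one-factor model:
    intercept + loading * latent mean (an assumed, simplified model)."""
    return [nu + lam * latent_mean for nu, lam in zip(intercepts, loadings)]


# Hypothetical four-item scale; loadings are identical in both groups.
loadings = [0.8, 0.7, 0.9, 0.6]

# Group A intercepts, and group B intercepts where only item 3 differs:
# group B consistently scores 0.5 higher on that item, regardless of the
# latent variable (a violation of intercept invariance).
intercepts_a = [2.0, 2.5, 3.0, 2.2]
intercepts_b = [2.0, 2.5, 3.5, 2.2]

# Both groups have exactly the same latent mean.
latent_mean = 0.0

scale_mean_a = fmean(expected_item_means(intercepts_a, loadings, latent_mean))
scale_mean_b = fmean(expected_item_means(intercepts_b, loadings, latent_mean))

# The observed scale means differ although the latent means do not.
apparent_difference = scale_mean_b - scale_mean_a
print(f"Apparent group difference in scale means: {apparent_difference:.3f}")
```

In this toy setup the shift of 0.5 on one of four items produces a difference of 0.5 / 4 = 0.125 in the average scale score, even though the groups do not differ on the latent variable at all. This is the kind of artefact that invariance testing is meant to detect.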

Recent developments in statistics have provided new analytical tools for assessing measurement invariance (MI), including the option of Approximate Measurement Invariance. Bengt Muthén and Tihomir Asparouhov (2012) have described a novel method in which, using Bayesian Structural Equation Models (BSEM), exact zero constraints are replaced with approximate zero constraints based on substantive theories. For example, the cross-loadings in confirmatory factor analysis, i.e. the loadings of items on factors they are not intended to measure, are traditionally constrained to be zero. Muthén and Asparouhov’s procedure allows for some ‘wiggle room’: very small, but non-zero cross-loadings. This possibility of approximate zero constraints is a promising alternative to the use of exact zeros, which has proven to be unrealistic at times (see for example Lommen et al., 2014). Approximate MI is another area in which approximate zeros might have an advantage: when full MI appears to be too strict (e.g. on some items one group consistently scores better than the other group), some ‘wiggle room’ in factor loadings or intercepts across groups can be allowed so that the model fits well.
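The difference between exact and approximate zero constraints can be written down compactly. The notation below is an assumption introduced here for illustration: $\lambda_{jk}$ is the cross-loading of item $j$ on a non-target factor $k$, $\lambda_{jg}$ and $\nu_{jg}$ are the loading and intercept of item $j$ in group $g$, and $\sigma^2$ is a small prior variance chosen by the analyst (a value such as 0.01 is often mentioned for standardized variables, but the appropriate value depends on the application).

$$
\begin{aligned}
\text{exact zero cross-loading:} \quad & \lambda_{jk} = 0 \\
\text{approximate zero cross-loading:} \quad & \lambda_{jk} \sim N(0, \sigma^2) \\
\text{exact MI:} \quad & \lambda_{jg} - \lambda_{jg'} = 0, \qquad \nu_{jg} - \nu_{jg'} = 0 \\
\text{approximate MI:} \quad & \lambda_{jg} - \lambda_{jg'} \sim N(0, \sigma^2), \qquad \nu_{jg} - \nu_{jg'} \sim N(0, \sigma^2)
\end{aligned}
$$

The smaller $\sigma^2$ is, the closer the approximate constraints come to the exact ones; a larger $\sigma^2$ gives more ‘wiggle room’.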

Ongoing

Models with different priors, i.e. prior settings that allow for the approximate zero solutions suggested by Muthén and Asparouhov, should not be compared using the ppp-value or the DIC, because these tools are not suited to comparing models with different priors. Instead, we suggest the Prior-Posterior Predictive P-value, which is able to compare models with different prior settings. The next step is to embed this p-value in existing software, e.g. Mplus and Blavaan. We are currently working on this implementation and preparing exercises on the Prior-Posterior Predictive P-value for teaching purposes. As soon as more information becomes available, it will be posted on the blog.

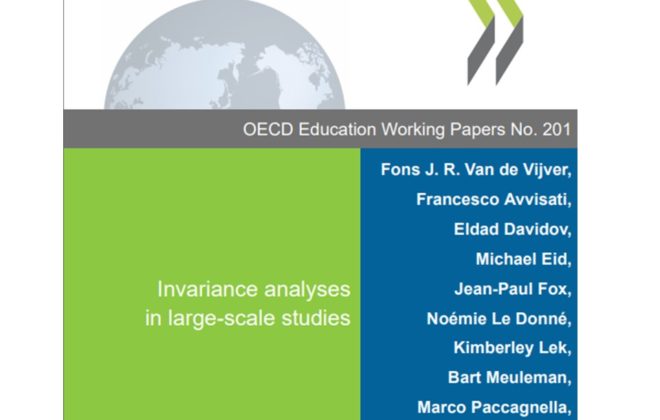

Currently Rens is part of an expert group chaired by Fons van de Vijver (Tilburg University) at the Organisation for Economic Co-operation and Development (OECD). So far, each expert group in the OECD has dealt independently with the issue of measurement invariance. The plan now is to develop a common framework and a state-of-the-art overview of contemporary knowledge on this topic within the OECD. To that end, Fons, Rens and others are arranging international, multidisciplinary expert meetings on measurement invariance issues. The expert group is drafting a paper that will serve as a reference on appropriate methodological choices for all OECD teams. Objectives and rationale can be found in the Word file. This part of the project is financially supported by the OECD.

Completed

The special journal issue on measurement invariance started off with a kick-off meeting with all potential contributors sharing potential paper ideas. After the kick-off meeting the authors submitted their papers, all of which were reviewed by experts in the field. The papers in the eBook are listed in alphabetical order; in the editorial the papers are introduced thematically. The expert workshop was organized at Utrecht University in The Netherlands and funded by the Netherlands Organization for Scientific Research (NWO-VENI-451-11-008).

The special issue on MI included the results of a project conducted in Rens’ research group. This entailed the very first simulation study of approximate measurement invariance, carried out in collaboration with, among several others, Bengt Muthén. The open access fee for publication was supported by a grant provided by the Netherlands Organization for Scientific Research.

As part of his presidency of the Early Research Union (ERU) of the European Association of Developmental Psychology (EADP), Rens organized a writing week for young scholars. The goal of the event was to finalize joint papers on cross-national comparisons of various instruments and submit them to the European Journal of Developmental Psychology, Special Section Developmetrics, Instruments and Procedures for Developmental Research. The writing week received financial support from the EADP.

Kimberley works together with Rens on how educational and psychological tests can be improved with new and existing statistical tools. One project focusses, for instance, on how (un)certainty in the test results of individual examinees can be estimated and expressed, to ...