Teacher knows best?

In this dissertation, we focused on two alternative approaches to evaluate the hypothesis of interest more directly, i.e. informative hypothesis testing and model selection using order-restricted information criteria.

Alternative Information: Bayesian Statistics, Expert Elicitation and Information Theory

In this dissertation, we focused on two alternative approaches to evaluate the hypothesis of interest more directly, i.e. informative hypothesis testing and model selection using order-restricted information criteria.

Bayesian versus Frequentist Estimation for SEM: A Systematic Review

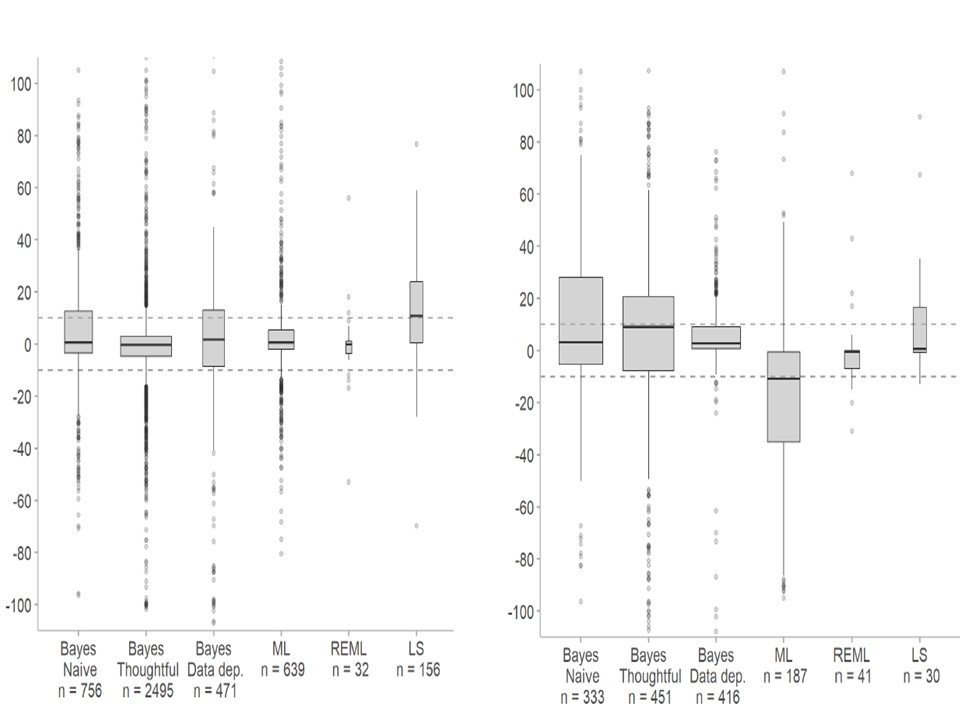

A simulation study compares the performance of maximum likelihood and Bayesian estimation with small and unbalanced samples in a latent growth model.

Small Sample Size Solutions: A Guide for Applied Researchers and Practitioners.

This unique resource provides guidelines and tools for implementing solutions to issues that arise in small sample research, illustrating statistical methods that allow researchers to apply the optimal statistical model for their research question when the sample is too small.

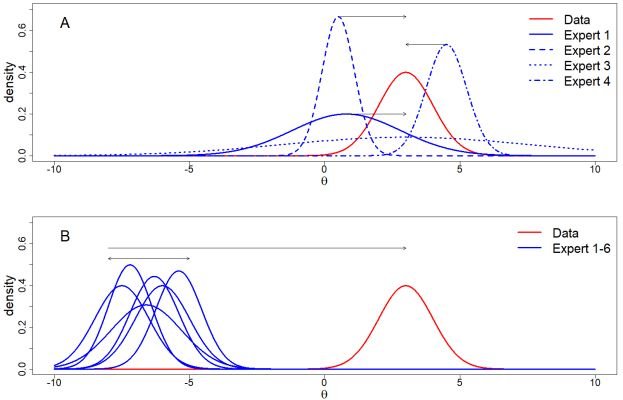

Dealing with imperfect elicitation results

We provide an overview of the solutions we used for dealing with imperfect elicitation results, so that others can benefit from our experience. We present information about the nature of our project, the reasons for the imperfect results, and how we resolved them, supported by annotated R syntax.

Predicting a Distal Outcome Variable From a Latent Growth Model

The aim of the current simulation study is to examine the performance of an LGM with a continuous distal outcome under maximum likelihood (ML) and Bayesian estimation with default and informative priors, under varying sample sizes, effect sizes and slope variance values.

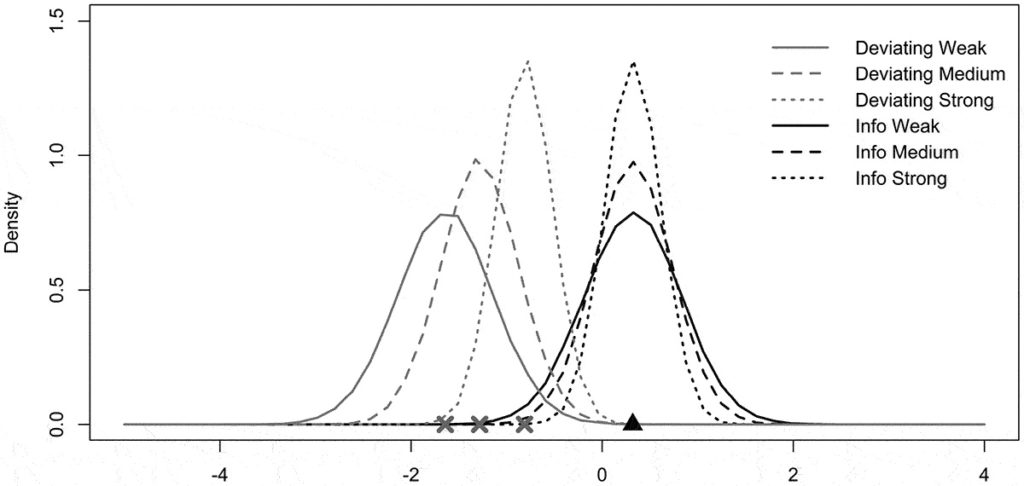

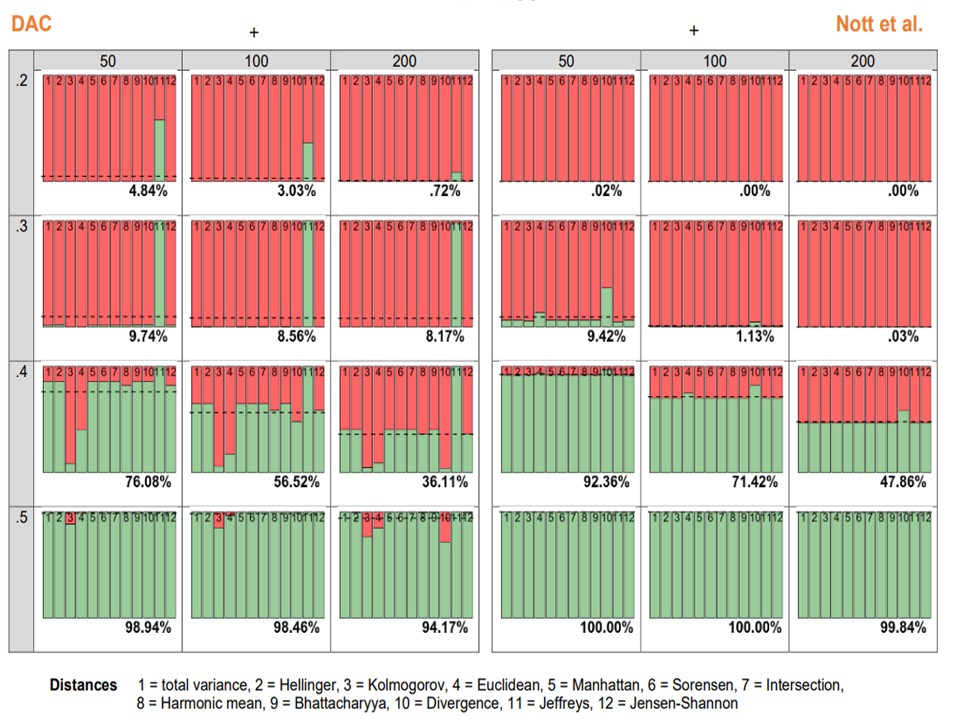

Choice of Distance Measure Influences the Detection of Prior-Data Conflict

The present paper contrasts two related criteria for the evaluation of prior-data conflict: the Data Agreement Criterion (DAC; Bousquet, 2008) and the criterion of Nott et al. (2016). We investigated how the choice of a specific distance measure influences the detection of prior-data conflict.

Pushing the Limits

A simulation study compares the performance of maximum likelihood and Bayesian estimation with small and unbalanced samples in a latent growth model.

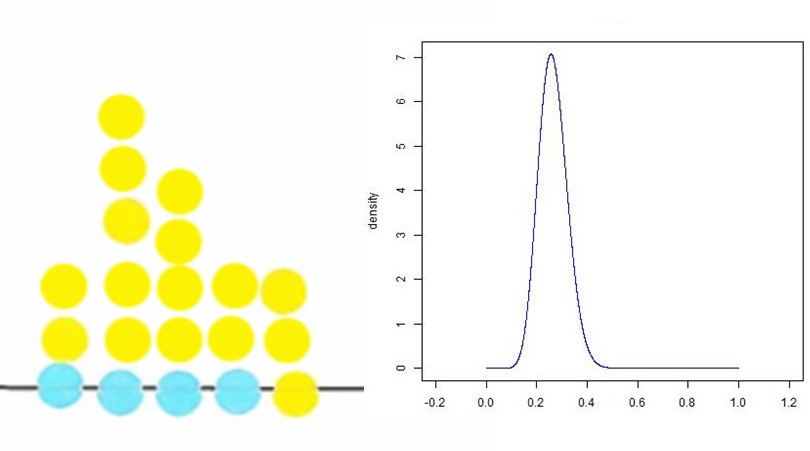

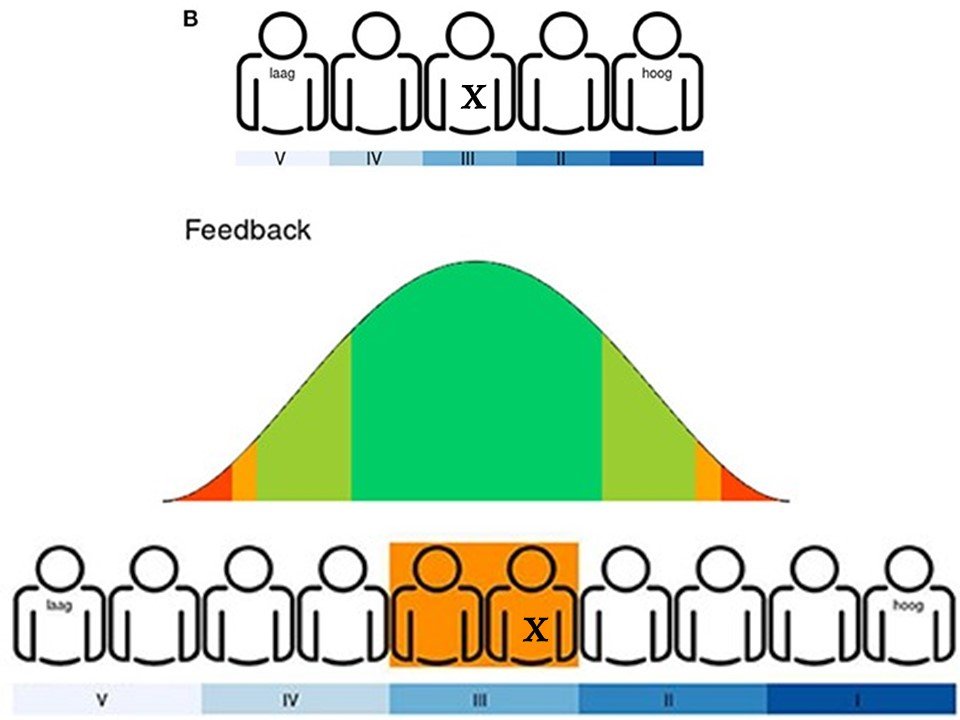

Development and Evaluation of a Digital Expert Elicitation Method

The paper describes an elicitation procedure which opens up many opportunities to investigate tacit differences between “subjective” teacher judgments and “objective” data such as test results and to raise the diagnostic competence of teachers.

Testing Small Variance Priors Using Prior-Posterior Predictive P-values

Muthén and Asparouhov (2012) propose to evaluate model fit in structural equation models based on approximate (using small variance priors) instead of exact equality of (combinations of) parameters to zero. This is an important development that adequately addresses Cohen’s (1994) “The earth is round (p < .05)”, which stresses that point null hypotheses are so precise that small and irrelevant deviations from the null hypothesis may lead to their rejection.

Using the Data Agreement Criterion to Rank Experts’ Beliefs

We evaluated priors based on expert knowledge by extending an existing prior-data (dis)agreement measure, the Data Agreement Criterion, and compared this approach to using Bayes factors to assess prior specification.

Statistical Methods for Small Data

After the publication of our paper “Bayesian PTSD-Trajectory Analysis with Informed Priors Based on a Systematic Literature Search and Expert Elicitation” in Multivariate Behavioral Research, Scientia contacted me to ask whether I was interested in an outreach publication based on the paper. Since I had funding for this…